AI/ML Basics

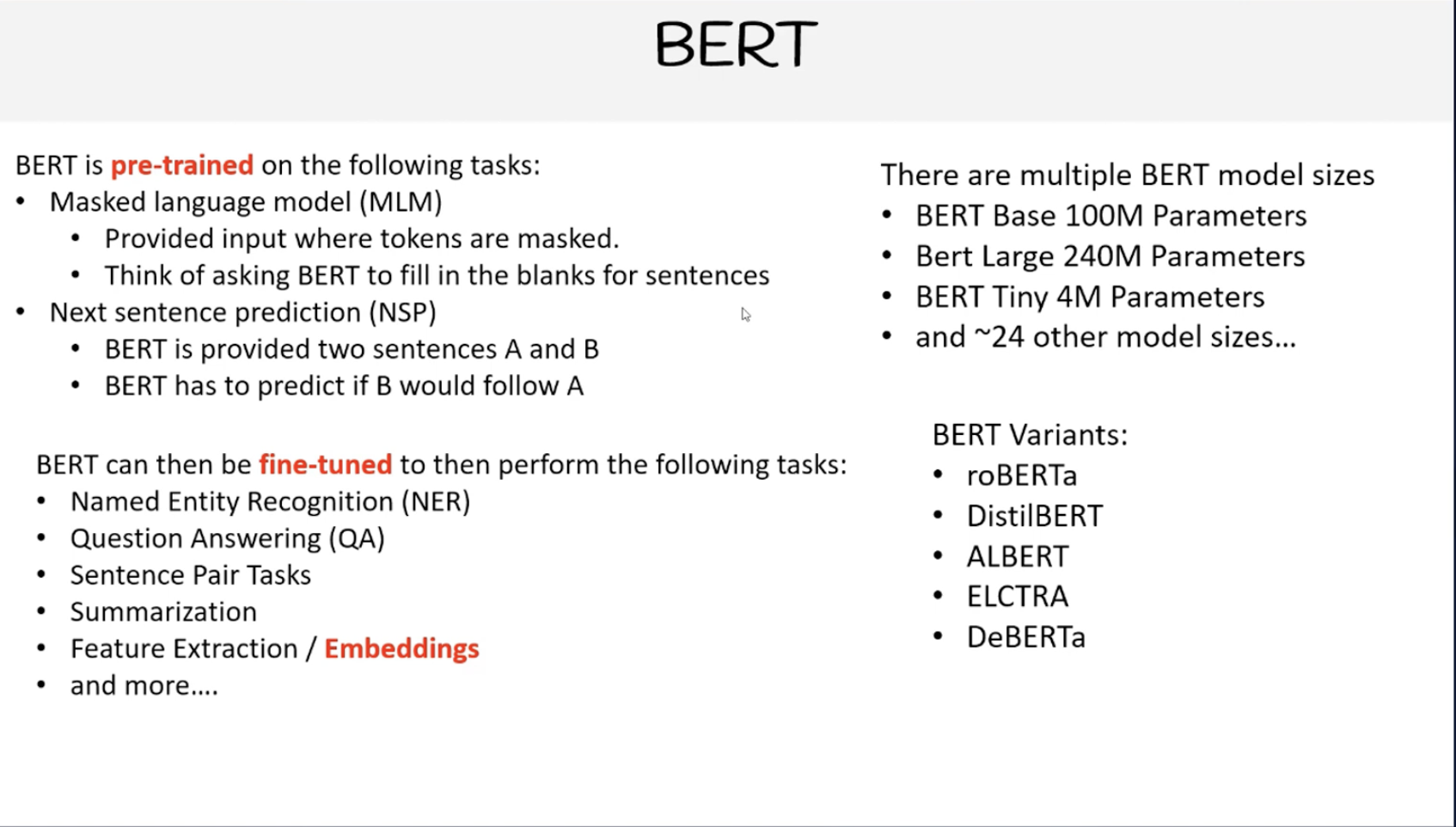

BERT

The BERT model was proposed in "BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding" by Jacob Devlin, Ming-Wei Chang, Kenton Lee, and Kristina Toutanova. It is a bidirectional transformer pre-trained with a combination of a masked language modeling objective and next sentence prediction on a large corpus comprising the Toronto Book Corpus and Wikipedia.
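To make the masked language modeling objective more concrete, here is a minimal sketch of how input tokens might be corrupted before training. The function name `mask_tokens`, its parameters, and the toy sentence are hypothetical; the 15% selection rate and the 80/10/10 replacement split follow the description in the BERT paper, but this is a simplified illustration, not the original implementation.

```python
import random

MASK = "[MASK]"

def mask_tokens(tokens, vocab, mask_prob=0.15, seed=0):
    """Return (corrupted_tokens, labels) for one masked-language-modeling example."""
    rng = random.Random(seed)
    corrupted, labels = [], []
    for tok in tokens:
        if rng.random() < mask_prob:
            # Selected position: the model must predict the original token here.
            labels.append(tok)
            roll = rng.random()
            if roll < 0.8:
                corrupted.append(MASK)               # 80%: replace with [MASK]
            elif roll < 0.9:
                corrupted.append(rng.choice(vocab))  # 10%: replace with a random token
            else:
                corrupted.append(tok)                # 10%: keep the token unchanged
        else:
            # Unselected position: left as-is and ignored by the loss.
            labels.append(None)
            corrupted.append(tok)
    return corrupted, labels

# Toy example; a high mask_prob is used only so the effect is visible on a short sentence.
tokens = "the cat sat on the mat".split()
corrupted, labels = mask_tokens(tokens, vocab=sorted(set(tokens)), mask_prob=0.5, seed=1)
print(corrupted)
print(labels)
```

Because the model sees the uncorrupted tokens on both sides of each selected position, it can use left and right context together, which is what "bidirectional" refers to.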

Sentence Transformers

SentenceTransformers is a Python framework for state-of-the-art sentence, text, and image embeddings. The initial work is described in the paper "Sentence-BERT: Sentence Embeddings using Siamese BERT-Networks". It provides a simple interface for computing embeddings for sentences, paragraphs, and images.
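As a rough sketch of what computing and comparing embeddings looks like, the snippet below wraps the library's `SentenceTransformer(...).encode(...)` call in a hypothetical helper named `embed` and compares vectors with cosine similarity. The model name `all-MiniLM-L6-v2` is an assumption chosen for illustration, and the toy vectors are made up so the similarity part runs without downloading a model.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def embed(sentences, model_name="all-MiniLM-L6-v2"):
    """Hypothetical helper: encode sentences with a pretrained model.

    Imported lazily so the rest of the sketch runs without the package installed.
    """
    from sentence_transformers import SentenceTransformer
    model = SentenceTransformer(model_name)
    return model.encode(sentences)  # one embedding vector per sentence

# Made-up 3-dimensional vectors standing in for real sentence embeddings.
toy_a = [1.0, 0.0, 1.0]
toy_b = [1.0, 0.0, 1.0]
toy_c = [0.0, 1.0, 0.0]
same = cosine_similarity(toy_a, toy_b)       # identical direction, close to 1
different = cosine_similarity(toy_a, toy_c)  # orthogonal vectors, 0
```

With the package installed, `embed(["A first sentence.", "A second sentence."])` should return one vector per input, and the rows can be passed to `cosine_similarity` in the same way.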

AI vs Gen AI

Gen AI is a subset of AI that focuses on creating new content or data that is novel and realistic.

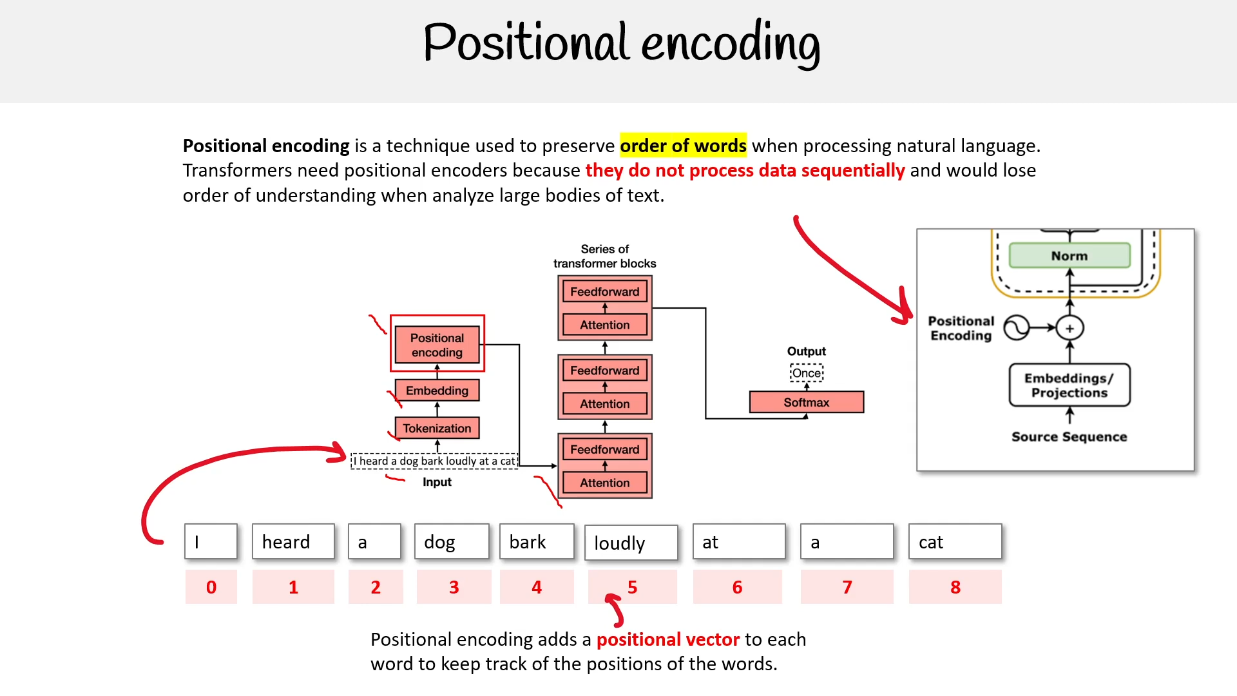

Positional Encodings

Transformer Architecture


Tokenization (NLP)


Foundation Models